Why B.C. Companies are in Critical Need of Penetration Testing


Canadian companies are falling behind when it comes to cybersecurity. Over 40% of Canadian businesses reported being the victim of a cyber attack in 2018¹, and that number is expected to rise. Cyber and information security is a topic businesses need to take seriously in order to protect the confidentiality, integrity, and availability of their information, products, and services.

Penetration testing (also known as ethical hacking or white hat hacking) is the process of making authorized attempts to exploit the security vulnerabilities of a system. The goal is to find these vulnerabilities so they can be patched before a malicious third party exploits them.

Most companies fall short on training and preparation for inevitable attacks, and they lack the resources to test the security of their own systems thoroughly. This is why expert resources are needed.

Penetration testing companies employ the same tools and means of vulnerability discovery as the black hat hackers (or “bad guys”) you want to protect your systems against. By putting your security in the hands of professionals, and preparing for thorough penetration testing, you could potentially save your company money and the stress of recovering from a cyber attack.

Large companies and governments are often most focused on releasing a product on time that meets its functional requirements. As a result, testing is rushed and security becomes an afterthought. If your business has applications that can be accessed remotely by any method, your business is vulnerable to malicious attacks.

Sources of Vulnerabilities

The vulnerable areas of a web application are common across industries and frameworks, although some software and solutions are more vulnerable than others. The lessons below apply to any solution that is accessible over a network, particularly the internet.

Unsafe Development Practices

Unsanitized Input

Injection attacks, ranked first in the 2017 OWASP Top 10², take advantage of unsanitized input passed to back-end systems. Database queries, API requests, and front-end input handling must all be secured against these attacks through defensive programming. Teams must write code that ensures user input matches the anticipated valid data in all circumstances and at every location where users interact, or could potentially interact, with the system.
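Here is a minimal sketch of that defensive style in Python. It assumes a hypothetical `users` table and a hypothetical username rule. The function first checks the input against an allowlist pattern. It then passes the input to SQLite through a `?` placeholder, so the driver binds it as data instead of splicing it into the SQL text:

```python
import re
import sqlite3

# Hypothetical rule for this sketch: 3-32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")

def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user by name, rejecting input that doesn't match the expected shape."""
    # 1. Validate against the anticipated valid data before touching the database.
    if not USERNAME_RE.fullmatch(username):
        raise ValueError("invalid username")
    # 2. The ? placeholder makes the driver bind the value as data, never as SQL.
    cur = conn.execute("SELECT id, username FROM users WHERE username = ?", (username,))
    return cur.fetchone()
```

Each measure helps on its own. Used together, malformed input is rejected early, and anything that passes validation still cannot change the structure of the query.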

Code within the application itself should be written in a way that ensures functions, classes, and objects are all initialized in a safe manner.

Intercepting and Decrypting User Authentication

With stricter password requirements, more flexible login options, and social and OAuth sign-in systems, more authentication information is being stored than ever before. Nearly every application needs to let users save login sessions. Even where users must re-enter their password every time, any application with user interaction still has to store the state of the current session so the API can transmit data.

Malicious agents will hijack user authentication sessions through operating system vulnerabilities, browser vulnerabilities, third-party websites, and other sources of malicious software that are outside of the control of your software.

Session design must assume that authentication tokens will be intercepted. Encryption, password hashing, and salting are all pieces of the overall authentication design, but they are not the complete puzzle. A good authentication system should also account for real-world threats such as social engineering, brute-force attacks, and other common attack vectors.
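Salted password hashing is one piece of that puzzle, and Python's standard library can sketch it. This illustrates one technique under stated assumptions. It is not a complete authentication design. The sketch uses PBKDF2-HMAC-SHA256 with a random 16-byte salt per password. The iteration count is an assumed value and should be checked against current guidance. Verification uses a constant-time comparison, so timing does not reveal how much of a digest matched:

```python
import hashlib
import hmac
import secrets

# Assumed work factor; check current OWASP guidance and tune for your hardware.
ITERATIONS = 600_000

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest); store both, never the plaintext password."""
    salt = secrets.token_bytes(16)  # a fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

Each password gets its own salt, so two users with the same password still get different digests. This defeats precomputed lookup tables.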

Third-Party Library Vulnerabilities

The rise of open-source and software as a service (SaaS) has allowed development teams to move faster than ever. The ability to reuse code, software, and libraries developed for other projects, teams, or by other developers has many benefits. However, the open-source movement does have a cost when the security of the application is considered.

One of the most common repositories of open-source software is the npm registry. The name originally stood for “Node Package Manager”; however, packages for all types of frameworks and software are now stored there. In an open-response survey conducted by npm in 2017, over 97% of respondents used open-source software, and 52% were not satisfied with the tools available to evaluate their open-source software³.

Attackers can inject malicious dependencies into libraries that development teams commonly use. This code can stay dormant until it reaches production. It can detect production, for example, by checking whether it is served over HTTPS rather than from localhost, or by other network-based means. These malicious programs are often injected to collect user information, credit card details, or other sensitive data that would otherwise be protected through normal encryption.
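Lockfiles offer a partial defence. npm's `package-lock.json` records an `integrity` value for each package in the Subresource Integrity format: `sha512-` followed by a base64-encoded SHA-512 digest. npm checks this value when it installs. The Python sketch below reproduces that check for a downloaded package tarball. Note its limit: it detects a package that changed after the lockfile was written. It does not detect malicious code that was already present when that version was first pinned.

```python
import base64
import hashlib
import hmac

def sri_sha512(data: bytes) -> str:
    """Integrity string in the 'sha512-<base64 digest>' form used by npm lockfiles."""
    digest = hashlib.sha512(data).digest()
    return "sha512-" + base64.b64encode(digest).decode("ascii")

def verify_tarball(data: bytes, expected_integrity: str) -> bool:
    """True if the tarball bytes match the integrity value recorded in the lockfile."""
    return hmac.compare_digest(sri_sha512(data), expected_integrity)
```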

Infrastructure and Platform Vulnerabilities

New attack paths appear in platforms at an increasing rate. From 2011 through 2018, the number of registered Common Vulnerabilities and Exposures (CVEs) increased by 400%⁴.

For example, the Linux kernel has been affected by many vulnerabilities, including denial of service, arbitrary code execution, and root-level access to the system. Systems must be updated consistently with the latest security patches to resolve these vulnerabilities. Poor or non-existent patch management processes leave software vulnerable to attacks on its underlying platform or infrastructure.
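Patch management starts with knowing which versions are running and which versions fix a known issue. The Python sketch below compares dotted version strings against a hypothetical advisory table of minimum fixed versions. It is deliberately simple and ignores pre-release tags. Distributions also often backport fixes without changing the upstream version number, so a real process should rely on the vendor's own security advisories:

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '5.15.0-91-generic' into (5, 15, 0); only the leading numeric part is used."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))

def is_patched(installed: str, minimum_fixed: str) -> bool:
    # Tuples compare element by element, so (5, 15, 0) >= (5, 4, 0).
    return parse_version(installed) >= parse_version(minimum_fixed)

def unpatched(inventory: dict[str, str], advisories: dict[str, str]) -> list[str]:
    """Names of installed components older than their advisory's minimum fixed version."""
    return sorted(
        name
        for name, version in inventory.items()
        if name in advisories and not is_patched(version, advisories[name])
    )
```

For example, `unpatched({"libfoo": "1.2.3"}, {"libfoo": "1.2.4"})` flags the hypothetical `libfoo` as needing an update.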

Faulty Configuration

Many vulnerabilities arise from the way a system is configured rather than from the code itself. For example, during testing, “dummy” accounts are created so testers can log into the application and test the functionality users will have once it is deployed. These test accounts often use “admin” or other generic credentials as both username and password, and they frequently have administrator privileges. If these elevated accounts are not removed before the application is publicly released, anyone who guesses the generic credentials gains elevated privileges on your system.
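A pre-release check can catch leftover test accounts. The Python sketch below tries a short, hypothetical list of generic passwords against every account, plus each account's own username as a password. It uses a `check_password` callable that stands in for your application's real login verification. Run a check like this only against your own staging system before release:

```python
from typing import Callable, Iterable

# Hypothetical list of generic passwords; extend it with ones your testers use.
GENERIC_PASSWORDS = ("admin", "password", "test", "123456")

def find_generic_logins(
    usernames: Iterable[str], check_password: Callable[[str, str], bool]
) -> list[str]:
    """Return usernames that accept a generic password or their own username as password."""
    flagged = []
    for user in usernames:
        candidates = (*GENERIC_PASSWORDS, user)
        if any(check_password(user, pwd) for pwd in candidates):
            flagged.append(user)
    return flagged

if __name__ == "__main__":
    demo = {"admin": "admin", "alice": "S3cure!pass", "qa": "qa"}  # plaintext for the demo only
    print(find_generic_logins(demo, lambda u, p: demo.get(u) == p))  # ['admin', 'qa']
```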

This is just one example of a faulty configuration that could lead to an exploit. Others include overly permissive file permissions, an incorrect web.config, and directory listing enabled on your web application.
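On a POSIX system, overly permissive file permissions are easy to scan for. The sketch below walks a directory tree and reports files and directories that any local user can write to (the `S_IWOTH` bit). It skips symbolic links, whose own permission bits do not control access on Linux. You choose the root directory to scan; nothing here is specific to a particular web server:

```python
import os
import stat

def world_writable(root: str) -> list[str]:
    """List files and directories under root that any local user can write to."""
    found = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: inspect the link itself, not its target
            except OSError:
                continue  # vanished or unreadable; skip it
            if stat.S_ISLNK(mode):
                continue  # a symlink's own mode bits don't govern access on Linux
            if mode & stat.S_IWOTH:
                found.append(path)
    return sorted(found)
```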

Human Error

A safe and secure business incorporates security at every level. A critical aspect of this philosophy is ensuring that your employees cannot be socially engineered. Social engineering is the process of manipulating and misleading someone into providing an attacker with valuable or sensitive information and is responsible for some of the most damaging exploits.

Kevin Mitnick is an example of how dangerous social engineering can be. He called various tech companies and impersonated an employee or customer in distress. In this way he gained the sympathy of employees and convinced them to hand over everything from passwords to source code. His social engineering tactics made him one of the most notorious hackers of his era.

It is important that your employees are aware of social engineering and are trained to follow the correct procedures when sensitive information is involved. It is also important to apply the Principle of Least Privilege to both employees and software: an employee or program should have only the privileges it needs to perform its duty, and nothing more.
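In software, least privilege usually means granting permissions per role and denying anything not explicitly granted. Here is a minimal Python sketch with hypothetical roles and permissions:

```python
from enum import Enum, auto

class Permission(Enum):
    READ_REPORTS = auto()
    EDIT_REPORTS = auto()
    MANAGE_USERS = auto()

# Hypothetical roles: each gets only what its duty requires.
ROLE_PERMISSIONS = {
    "viewer": frozenset({Permission.READ_REPORTS}),
    "analyst": frozenset({Permission.READ_REPORTS, Permission.EDIT_REPORTS}),
    "admin": frozenset(Permission),  # every member of the enum
}

def is_allowed(role: str, permission: Permission) -> bool:
    # Deny by default: an unknown role gets an empty permission set.
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

The important design choice is the default. A role that was never configured can do nothing, rather than everything.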

How to Perform Penetration Testing

Our penetration testing process follows the guidelines outlined in NIST SP 800-115⁵, ensuring a thorough evaluation of your technical resources.

Our process comprises four distinct phases, adapted from NIST SP 800-115⁵.

- Analysis. Before penetration testing begins, written permission must be obtained from both the owner of the system and any hosting service. The analysis phase is used to gather and organize the information needed to carry out the test, such as the assets to be assessed, the threats of interest, and the requirements the assessment must comply with.

- Discovery. The discovery phase is the penetration testing itself. Multiple tools and techniques are used to test the system thoroughly and avoid false negatives. The choice of tools and techniques depends on the target system and the type of system being tested. Once potential vulnerabilities are discovered, specialized tools and techniques are used to exploit and verify each one. When real vulnerabilities have been confirmed and false positives ruled out, work can begin on remediation.

- Remediation. The remediation phase involves isolating the root cause of each vulnerability, and either fixing the vulnerabilities ourselves or making recommendations to your development team on how they can fix them.

- Prevention. Closely related to the remediation phase, the prevention phase involves creating a final security report that outlines the vulnerabilities found, their root causes, and recommendations for preventing them. The report includes enough detail to explain both the immediate fixes and how future projects can avoid the same vulnerabilities.
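The written permission gathered in the Analysis phase defines the scope of the test, and tooling can enforce that scope. Here is a minimal Python sketch of a helper that refuses any URL whose host and port were not authorized. The allowlist is hypothetical and mirrors the local DVNA setup described next:

```python
from urllib.parse import urlsplit

# Hypothetical scope agreed in writing during the Analysis phase: (host, port) pairs.
AUTHORIZED_TARGETS = {("localhost", 9090)}
DEFAULT_PORTS = {"http": 80, "https": 443}

def in_scope(url: str) -> bool:
    """True only if the URL's host and port appear in the authorized scope."""
    parts = urlsplit(url)
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(parts.scheme)
    return (parts.hostname, port) in AUTHORIZED_TARGETS

def require_in_scope(url: str) -> str:
    """Raise before any request is sent to a target outside the agreed scope."""
    if not in_scope(url):
        raise PermissionError(f"{url} is outside the authorized scope")
    return url
```

The check is deliberately strict. `127.0.0.1` is rejected even though it usually resolves to the same machine as `localhost`, because it was not the name written into the scope.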

To demonstrate the process, I will explain the results of a penetration test on Damn Vulnerable Node Application (DVNA) by Appsecco. DVNA is a deliberately vulnerable web application, which we hosted locally for penetration testing education and demonstrations. I will test DVNA with Burp Suite on Kali Linux; Burp Suite is one of the many professional tools we use.

Analysis

Before starting a vulnerability scan in Burp Suite, configure the browser that will access the web application to use Burp Suite as a proxy. Burp Suite's CA certificate must also be installed in that browser so HTTPS traffic can be intercepted. Passing all traffic to and from the site through our proxy amounts to a “man in the middle” attack, which lets us analyze every detail of the information being transmitted.
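The same proxy setup applies to scripted clients. The sketch below assumes Burp's proxy listener is on its default address of `127.0.0.1:8080`. A Python client built on `urllib.request` can then send its traffic through Burp, so scripted requests also appear in Burp's proxy history. The optional `ca_file` is a hypothetical path to a PEM copy of Burp's CA certificate and is needed only for HTTPS targets:

```python
import ssl
import urllib.request
from typing import Optional

# Burp Suite's proxy listener defaults to 127.0.0.1:8080; adjust if yours differs.
BURP_PROXY = "http://127.0.0.1:8080"

def burp_opener(ca_file: Optional[str] = None) -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS requests through Burp's proxy."""
    handlers = [urllib.request.ProxyHandler({"http": BURP_PROXY, "https": BURP_PROXY})]
    if ca_file is not None:
        # Trust Burp's CA so the certificates Burp presents for intercepted sites verify.
        context = ssl.create_default_context(cafile=ca_file)
        handlers.append(urllib.request.HTTPSHandler(context=context))
    return urllib.request.build_opener(*handlers)
```

For example, `burp_opener().open("http://localhost:9090/app/ping")` would request the local DVNA instance through Burp.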

Once the environment is configured, we navigate the entire web application manually to build a Site Map in Burp Suite. The Site Map aggregates all the resources that make up the web application. While we navigate, Burp Suite passively analyzes the network traffic and learns more about the target application. We also use automated spidering software to crawl resources that manual navigation may miss. This combination of mapping techniques gives our vulnerability scanner the most accurate representation of the web application to test in the Discovery phase.
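At its core, a spider is a breadth-first walk over same-site links. The Python sketch below shows that idea using the standard library's `html.parser`. It is an illustration, not how Burp Suite's crawler works internally. To stay self-contained, `crawl` takes a `fetch` callable that you supply and that returns a page's HTML. It follows only `href`, form `action`, and script `src` attributes that stay on the starting host:

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlsplit

# Which attribute holds a followable URL for each tag this sketch cares about.
LINK_ATTRS = {"a": "href", "form": "action", "script": "src"}

class LinkExtractor(HTMLParser):
    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.host = urlsplit(page_url).netloc
        self.links = set()

    def handle_starttag(self, tag, attrs):
        wanted = LINK_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative links and drop #fragments, which name the same resource.
                absolute, _fragment = urldefrag(urljoin(self.page_url, value))
                if urlsplit(absolute).netloc == self.host:  # stay on the target site
                    self.links.add(absolute)

def crawl(start_url: str, fetch: Callable[[str], str], max_pages: int = 50) -> list[str]:
    """Breadth-first crawl of same-site links; returns URLs in the order visited."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        parser = LinkExtractor(url)
        parser.feed(fetch(url))
        for link in sorted(parser.links):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited
```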

Discovery

Once the site map is built, we can use Burp Suite's scanner tool to automate vulnerability discovery. The scanner traverses the site map and dynamically manipulates the requests sent to the application. It identifies the variable positions in each request, inserts malicious payloads into those positions, and sends the modified requests in rapid succession. Burp Suite then analyzes the server's responses for errors or unusual responses that may indicate a vulnerability.
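The payload-insertion step can be sketched for a URL-encoded form body like the one in the request below. This Python helper illustrates the idea only and is not Burp Suite's scanner logic. The payload in the usage example is hypothetical. For each parameter in turn, the helper appends each payload to that parameter's value and leaves the other parameters untouched:

```python
from urllib.parse import parse_qsl, urlencode

def mutate_form_body(body: str, payloads: list[str]) -> list[tuple[str, str]]:
    """Return (parameter, mutated body) pairs, one payload in one parameter at a time."""
    params = parse_qsl(body, keep_blank_values=True)
    mutations = []
    for index, (name, value) in enumerate(params):
        for payload in payloads:
            mutated = list(params)                     # copy; other parameters stay as sent
            mutated[index] = (name, value + payload)   # append the payload to this value
            mutations.append((name, urlencode(mutated)))
    return mutations

if __name__ == "__main__":
    for param, body in mutate_form_body("address=1+Main+Street", ["|echo probe"]):
        print(param, body)
```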

    POST /app/ping HTTP/1.1
    Host: localhost:9090
    Accept-Encoding: gzip, deflate
    Accept: */*
    Accept-Language: en
    User-Agent: Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0)
    Connection: close
    Referer: http://localhost:9090/app/ping
    Content-Type: application/x-www-form-urlencoded
    Content-Length: 21
    Cookie: connect.sid=s%3A4pJLNS_ZIj9HR5nW_PJb9wJWBB06Qshl.%2FeFEY8NM%2BSaSLJefqv4zHGAJhKoEr280YydwS3dkrlg

    address=1%20Main%20Street%7cecho%20i53d2t4mk0%20h8ror5vwxm%7c%7ca%20%23'%20%7cecho%20i53d2t4mk0%20h8ror5vwxm%7c%7ca%20%23%7c%22%20%7cecho%20i53d2t4mk0%20h8ror5vwxm%7c%7ca%20%23

When the vulnerability scan is complete, it produces a list of the vulnerabilities found, each with its path in the application and the payloads used to expose it. With this knowledge, we can test each issue further to determine whether it poses a real threat that needs to be patched or is only a false positive.
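One common way to separate a real command injection from a false positive is to make the expected output differ from the input. Echoing a fixed marker is unreliable, because the marker would also appear if the application merely reflected the input back. Instead, the probe below asks a POSIX shell to evaluate an arithmetic expression and then looks for the result. This is a hypothetical Python sketch of the idea, not Burp Suite's detection logic. It assumes the target passes input to a POSIX shell:

```python
import secrets

def make_probe() -> tuple[str, str]:
    """Return (payload, expected_output) for a command-injection confirmation test."""
    a = 1000 + secrets.randbelow(9000)  # random four-digit operands
    b = 1000 + secrets.randbelow(9000)
    # $(( )) is POSIX shell arithmetic expansion; a shell replaces it with a + b.
    payload = f"|echo $(({a}+{b}))"
    return payload, str(a + b)

def confirms_injection(response_body: str, expected_output: str) -> bool:
    # The sum never appears in the payload text itself, so a response that merely
    # reflects the input does not produce a match.
    return expected_output in response_body
```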

Remediation

At this point, the vulnerabilities found are reviewed manually. Manual review requires experienced professionals to analyze, and attempt to exploit, the vulnerabilities reported by the automated processes. Vulnerabilities may take the form of SQL injection, cross-site scripting (XSS), buffer overflows, and more. Verifying them involves real, though authorized, attacks on your system. It is therefore important to ensure your system has been backed up and can recover from potential damage.

The verification process uses a wide array of tools, each specialized in exploiting a particular type of vulnerability. Burp Suite's Intruder tool injects chosen payloads into vulnerable points of the web application. You pick custom insertion points in a request, then choose from built-in payload lists or supply your own.
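Intruder marks insertion points by wrapping them in `§` characters. Its Sniper attack type uses a single payload list and places each payload into one position at a time, while the other positions keep their original values. The Python sketch below reproduces only that expansion step, to show how many requests a template produces. The template and payloads in the usage example are hypothetical:

```python
import re

# Burp-style insertion points: text wrapped in § markers.
POSITION = re.compile(r"§([^§]*)§")

def sniper(template: str, payloads: list[str]) -> list[str]:
    """Expand a template Sniper-style: each payload into each position, one at a time."""
    positions = list(POSITION.finditer(template))
    requests = []
    for target in positions:
        for payload in payloads:
            parts, last = [], 0
            for match in positions:
                parts.append(template[last:match.start()])
                # Only the targeted position gets the payload; others keep their original text.
                parts.append(payload if match is target else match.group(1))
                last = match.end()
            parts.append(template[last:])
            requests.append("".join(parts))
    return requests

if __name__ == "__main__":
    for request in sniper("user=§alice§&role=§viewer§", ["'", "<x>"]):
        print(request)
```

The number of requests is the number of positions multiplied by the number of payloads. Sniper therefore suits testing each input independently.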

Remediation may be implemented either by our own developers or by your team. If your team will be responsible, the Security Report discussed in the next section provides the details needed to do so.

Prevention

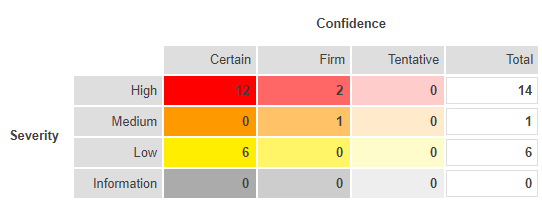

Once issues have been manually verified, we can generate a Security Report with Burp Suite. The report outlines each issue found, explains why it is a threat, and gives remediation advice. At the top of the report, a summary shows the severity of the issues found and the confidence that each one is genuine.
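Burp Suite rates each issue's severity as High, Medium, Low, or Information, and its confidence as Certain, Firm, or Tentative. The report summary counts issues along both dimensions. If you track verified findings elsewhere, building a similar summary table takes only a few lines of Python. The finding records below are hypothetical:

```python
from collections import Counter

SEVERITIES = ("High", "Medium", "Low", "Information")
CONFIDENCES = ("Certain", "Firm", "Tentative")

def summarize(findings: list[dict[str, str]]) -> dict[str, dict[str, int]]:
    """Count findings in a severity x confidence grid, with zeros for empty cells."""
    counts = Counter((f["severity"], f["confidence"]) for f in findings)
    return {sev: {conf: counts[(sev, conf)] for conf in CONFIDENCES} for sev in SEVERITIES}

def format_summary(grid: dict[str, dict[str, int]]) -> str:
    """Render the grid as a fixed-width text table."""
    header = "Severity".ljust(12) + "".join(c.rjust(10) for c in CONFIDENCES)
    rows = [sev.ljust(12) + "".join(str(n).rjust(10) for n in row.values())
            for sev, row in grid.items()]
    return "\n".join([header, *rows])

if __name__ == "__main__":
    findings = [  # hypothetical verified findings
        {"name": "OS command injection", "severity": "High", "confidence": "Certain"},
        {"name": "Cookie without HttpOnly flag", "severity": "Low", "confidence": "Firm"},
    ]
    print(format_summary(summarize(findings)))
```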

Each issue is listed with its severity, confidence, and location, along with a detailed write-up of the issue background and the steps needed to mitigate the threat. The relevant HTTP requests and responses are also included, with the payload highlighted. The report thus gives a thorough account of the vulnerabilities found in the web application, with background details and remediation suggestions for each. We strongly recommend that companies investigate the vulnerabilities in their systems further and fix them as soon as possible. The information in such a report should also lay the foundation for security best practices in future systems.

Conclusion

Cyber and information security is an aspect of operations that every business needs to make a top priority. Proper employee training is a necessary start for every business. However, the highly technical and critical process of penetration testing your systems should be left to specialists. Finding a team you can trust, with expert knowledge and tools, is fundamental to avoiding the headache of recovering from a cyber attack. A proper team should evaluate your systems safely and provide professional feedback so that you can secure them. Your business should make security part of its culture, where continual learning and precautionary measures help you maintain and build systems that are robust and resilient.

About the Author

Chris Holland

Chris applies his skills and experience as a Security Specialist and Software Engineer at AOT Technologies, where he focuses on the analysis, testing, and remediation of web applications. He combines state-of-the-art security tools and methods with formal training in cybersecurity and software engineering. His aim is to keep valued web applications from falling victim to cyber attacks that can cause significant harm to a company's wellbeing.

References

¹ CIRA, “Cybersecurity Report,” https://cira.ca/sites/default/files/public/cybersecurity_report_181015.pdf.

² OWASP, “OWASP Top 10,” https://www.owasp.org/index.php/Top_10-2017_Top_10.

³ npm, Inc., “Attitudes to security in the JavaScript community,” Medium, 9 Apr. 2018, https://medium.com/npm-inc/security-in-the-js-community-4bac032e553b.

⁴ NIST, “CVSS Severity Distribution Over Time,” National Vulnerability Database, https://nvd.nist.gov/vuln-metrics/visualizations/cvss-severity-distribution-over-time.

⁵ NIST, “NIST SP 800-115, Technical Guide to Information …,” https://ws680.nist.gov/publication/get_pdf.cfm?pub_id=152164.